Keploy for Reliable and Repeatable API Testing

In today's fast-paced digital landscape, software reliability has become paramount to business success. Organizations cannot afford system failures, downtime, or performance degradation that could result in revenue loss, damaged reputation, or compromised user experience. This comprehensive guide explores the fundamentals of reliability testing, methodologies, tools, and best practices that ensure software systems perform consistently under various conditions.

Understanding Reliability Testing

Reliability testing is a systematic approach to evaluate how well a software system performs its intended functions under specified conditions for a predetermined period. Unlike functional testing, which focuses on whether features work correctly, reliability testing examines the system's ability to maintain consistent performance over time, handle stress conditions, and recover from failures gracefully.

The primary objective of reliability testing is to identify potential failure points before they impact end users. This proactive approach helps development teams build more robust systems, reduce maintenance costs, and improve customer satisfaction. Modern reliability testing encompasses various dimensions including system stability, error handling, resource utilization, and performance consistency.

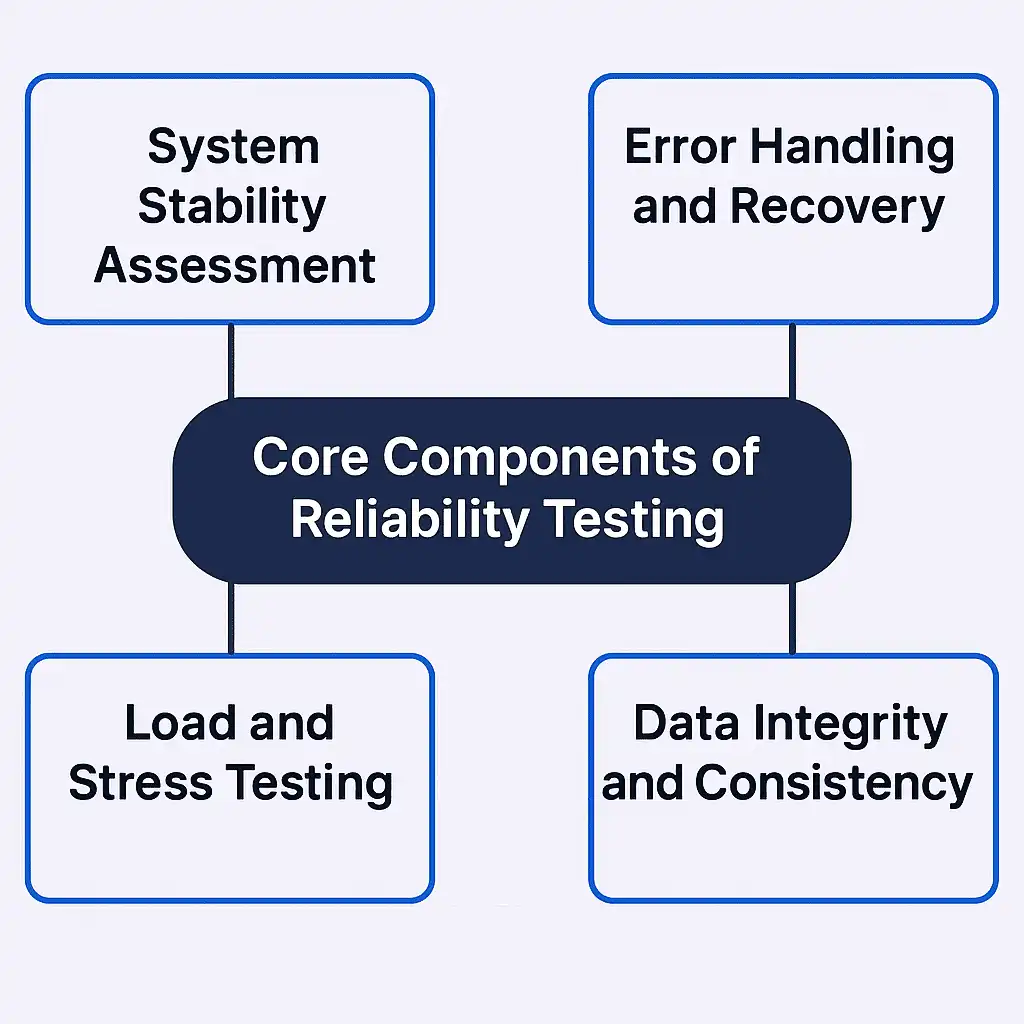

Core Components of Reliability Testing

System Stability Assessment

System stability forms the foundation of reliability testing. This involves running the application continuously for extended periods while monitoring resource consumption, memory leaks, and performance degradation. Stability testing helps identify issues that manifest only after prolonged operation, such as gradual memory accumulation or database connection pool exhaustion.

Error Handling and Recovery

Robust error handling mechanisms are crucial for system reliability. Testing should verify that the application gracefully handles unexpected inputs, network failures, database connectivity issues, and third-party service outages. The system should not only detect errors but also recover automatically when possible or provide meaningful feedback to users when manual intervention is required.

Load and Stress Testing

Understanding how systems behave under various load conditions is essential for reliability assessment. Load testing evaluates performance under expected user volumes, while stress testing pushes the system beyond normal operating conditions to identify breaking points. These tests reveal bottlenecks, resource limitations, and potential failure modes under high-demand scenarios.

Data Integrity and Consistency

Reliability testing must ensure data remains accurate and consistent throughout system operations. This includes validating transaction integrity, testing backup and recovery procedures, and verifying data synchronization across distributed systems. Any compromise in data integrity can have severe consequences for business operations.

Methodologies and Approaches

Statistical Reliability Analysis

Statistical methods provide quantitative measures of system reliability. Mean Time Between Failures (MTBF) and Mean Time To Repair (MTTR) are key metrics that help organizations understand system dependability. These metrics guide decision-making regarding maintenance schedules, resource allocation, and system design improvements.

Fault Injection Testing

Fault injection involves deliberately introducing failures into the system to evaluate its response. This technique helps identify weaknesses in error handling, recovery mechanisms, and system resilience. By simulating various failure scenarios, teams can validate their disaster recovery procedures and improve system robustness.

Automated Reliability Testing

Automation plays a crucial role in modern reliability testing. Automated test suites can run continuously, providing ongoing assessment of system reliability. This approach enables early detection of reliability issues and supports continuous integration practices. Automated testing also reduces human error and ensures consistent test execution.

Keploy: Transforming API Reliability Testing

Keploy represents a significant advancement in API reliability testing through its innovative approach to test generation and execution. This open-source testing platform automatically generates comprehensive test cases by capturing real API interactions, eliminating the traditional burden of manual test creation.

The platform's core strength lies in its ability to record actual API calls during development or staging environments, then replay these interactions to validate system behavior. This approach ensures that test scenarios reflect real-world usage patterns rather than theoretical test cases that might miss critical edge cases.

The tool's regression testing capabilities help maintain system reliability as code evolves. By automatically detecting when changes break existing functionality, Keploy prevents reliability regressions from reaching production environments. This proactive approach significantly reduces the risk of system failures and maintains consistent user experiences.

Furthermore, Keploy's integration with continuous integration pipelines ensures that reliability testing becomes an integral part of the development process rather than an afterthought. This shift-left approach to reliability testing enables teams to identify and address issues early in the development cycle, reducing both development costs and time to market.

Implementation Best Practices

Establishing Reliability Requirements

Successful reliability testing begins with clear requirements definition. Organizations must establish specific reliability targets, including acceptable failure rates, recovery time objectives, and performance thresholds. These requirements should align with business objectives and user expectations, providing measurable criteria for testing success.

Environment Configuration

Testing environments should closely mirror production systems to ensure accurate reliability assessments. This includes matching hardware specifications, network configurations, and data volumes. Discrepancies between test and production environments can lead to false confidence in system reliability.

Monitoring and Metrics

Comprehensive monitoring during reliability testing provides insights into system behavior and performance patterns. Key metrics include response times, error rates, resource utilization, and throughput. These measurements help identify trends, bottlenecks, and potential failure points before they impact users.

Iterative Testing Approach

Reliability testing should be iterative, with results feeding back into system improvements. Each testing cycle should build upon previous findings, gradually improving system reliability. This approach ensures continuous enhancement rather than one-time validation.

Challenges and Solutions

Scalability Testing Complexities

Testing system reliability at scale presents unique challenges. Simulating realistic user loads while maintaining test environment integrity requires sophisticated tooling and infrastructure. Cloud-based testing solutions can help address these challenges by providing scalable resources and realistic network conditions.

Test Data Management

Reliable testing requires consistent, representative test data. Managing test data across multiple environments and maintaining data privacy compliance adds complexity to reliability testing processes. Automated data generation and anonymization tools can help address these challenges.

Integration Testing Challenges

Modern applications rely heavily on external services and APIs. Testing reliability in these integrated environments requires careful coordination and sophisticated mocking strategies. Service virtualization and contract testing approaches can help manage these complexities.

Future Trends in Reliability Testing

AI-Driven Testing

Artificial intelligence is beginning to transform reliability testing through predictive analytics and intelligent test generation. Machine learning algorithms can analyze system behavior patterns to predict potential failures and optimize testing strategies.

Chaos Engineering

Chaos engineering principles are increasingly being applied to reliability testing. By deliberately introducing failures in controlled ways, teams can build more resilient systems and validate their recovery procedures.

Continuous Reliability

The shift toward continuous deployment requires continuous reliability assessment. Modern testing platforms are evolving to provide real-time reliability monitoring and automated remediation capabilities.

Conclusion

Reliability testing remains a critical discipline in software development, ensuring that systems meet user expectations and business requirements. As applications become more complex and distributed, the importance of comprehensive reliability testing continues to grow. Tools like Keploy are making reliability testing more accessible and effective, while emerging trends promise even greater capabilities in the future.

Organizations that invest in robust reliability testing practices will be better positioned to deliver high-quality software, maintain customer satisfaction, and achieve business success in an increasingly competitive digital landscape. The key lies in adopting a systematic approach, leveraging appropriate tools and methodologies, and maintaining a commitment to continuous improvement.

Frequently Asked Questions

1. What is the difference between reliability testing and performance testing?

While both testing types are related, they serve different purposes. Performance testing focuses on how fast a system responds under various load conditions, measuring metrics like response time, throughput, and resource utilization. Reliability testing, on the other hand, examines whether a system consistently performs its intended functions over time without failures. Reliability testing includes performance aspects but extends beyond to cover system stability, error handling, and recovery capabilities. A system might perform well under load but fail reliability tests if it crashes after running for several hours or doesn't handle errors gracefully.

2. How long should reliability testing cycles run to be effective?

The duration of reliability testing depends on several factors including system complexity, criticality, and usage patterns. For most applications, reliability testing should run for at least 24-48 hours of continuous operation to identify issues like memory leaks or resource exhaustion. Critical systems such as banking or healthcare applications may require weeks or months of testing. The key is to simulate realistic usage patterns and run tests long enough to observe system behavior under various conditions. Many organizations use a combination of short-term intensive tests and long-term stability tests to achieve comprehensive coverage.

3. Can reliability testing be fully automated, or is manual intervention necessary?

Reliability testing can be largely automated, but complete automation isn't always feasible or desirable. Automated tools excel at executing repetitive tests, monitoring system metrics, and detecting performance degradation. However, manual intervention is often necessary for test scenario design, result interpretation, and investigating complex failure modes. The most effective approach combines automated execution with human oversight for analysis and decision-making. Modern tools like Keploy are making automation more accessible by automatically generating test cases from real interactions, but human expertise remains crucial for comprehensive reliability assessment.

4. What are the most common reliability issues discovered during testing?

The most frequently discovered reliability issues include memory leaks leading to gradual performance degradation, improper error handling causing system crashes, database connection pool exhaustion, inadequate resource cleanup, and race conditions in concurrent operations. Network-related issues such as timeout handling and connection failures are also common, especially in distributed systems. Third-party service dependencies often introduce reliability challenges when external services become unavailable. Understanding these common patterns helps teams focus their testing efforts on the most likely failure points.

5. How should organizations prioritize reliability testing efforts with limited resources?

Organizations should prioritize reliability testing based on business impact and risk assessment. Critical system components that directly affect user experience or revenue should receive primary attention. High-traffic features and complex integrations typically warrant more extensive testing. Risk-based prioritization considers both the probability of failure and the potential consequences. Organizations should also focus on areas with historical reliability issues or recent code changes. Implementing automated testing for routine scenarios allows teams to allocate manual testing resources to high-risk areas. Starting with core functionality and gradually expanding coverage ensures that limited resources deliver maximum value.